How to Verify and Validate Backup Files and Folders

For detailed product information, please visit the BackupChain home page.

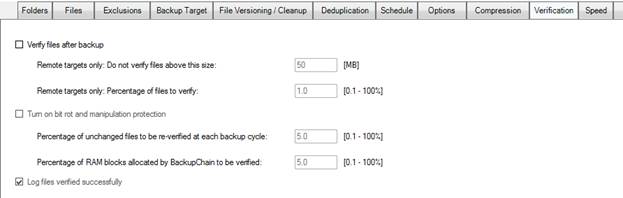

The verification tab offers various features to protect your backups:

Note that backup verification slows down your backups considerably because the data has to be read back from the target media. In addition, reading the data back is slower than usual because the Windows disk cache is turned off for those files, so several Windows optimizations that speed up file access are not available for them. This slower access affects only the file being verified and is not system-wide.

However, backup verification is crucial when handling critical data. When a file is backed up, its backup file, which may be in a different format than the original (a ZIP archive, for example), is read back and checked. For remote targets, the above option offers a maximum size limit, which prevents very large files from being downloaded just for verification, as well as a percentage limit. With the percentage limit you can restrict verification to, for example, just 5% of all new files so that backups finish faster.
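The combined effect of the two limits can be sketched in a few lines of Python. This is only an illustration of the idea, not BackupChain's implementation: the `BackupFile` type, the `select_for_verification` function, and the choice of a random sample are all assumptions made for the example.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupFile:
    name: str
    size_bytes: int


def select_for_verification(files, max_size_bytes, percent, seed=None):
    """Pick a random sample of newly backed-up files to verify.

    Files larger than max_size_bytes are skipped (the size limit); of the
    remaining files, roughly `percent` percent are chosen, and at least one
    if any file is eligible (the percentage limit).
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    eligible = [f for f in files if f.size_bytes <= max_size_bytes]
    if not eligible or percent == 0:
        return []
    count = max(1, round(len(eligible) * percent / 100))
    rng = random.Random(seed)  # seed is only there to make runs repeatable
    return rng.sample(eligible, count)
```

With 1,000 new files below the size limit and a 5% setting, this sketch would read back about 50 files per backup run instead of all 1,000.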

This percentage limitation makes a lot of sense, especially for very large file server backups and in combination with the second feature “turn on bit rot and manipulation protection”.

Reverification / Validation of backup files

Files at the target may become damaged without your knowledge. Even on RAID controllers using mirror configurations, files and sectors may become corrupt without the controller noticing, because RAID controllers usually do not compare sectors between drives while reading. A hardware fault can hence go undetected for quite some time. In addition, RAM chips can corrupt data. Both the RAM contained in hard drives and server RAM, despite ECC technology, can be damaged without producing any noticeable symptoms for a long time. For example, ECC RAM can be damaged in a way that causes bytes to be written correctly but to the wrong address, due to damage in the chip’s internal address bus. By its nature, ECC does not protect against this and certain other types of defects. Furthermore, malicious software may encrypt backup files, and disgruntled employees sometimes vandalize them.

To catch such possibilities within reasonable time and use minimal resources in doing so, BackupChain offers a percentage-based resource coverage option. You can specify to test a fraction of RAM used for I/O against RAM defects and a fraction of files to be re-verified. In case of remote targets, the size options of the upper half of the screen are also taken into consideration when re-verifying.

Re-verifying backup files involves (downloading and) reading back the entire backup file and checking for internal consistency, for example using checksums. This can also be done with previous backup versions of a file that has changed since.
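As a rough illustration of checksum-based re-verification, the following Python sketch reads each file back in full and compares its SHA-256 digest with a digest recorded at backup time. The manifest layout and the function names are assumptions for this example; the checks BackupChain performs internally are not described in this article.

```python
import hashlib


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Read the whole file in chunks and return its SHA-256 hex digest."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def reverify(manifest):
    """Return the paths whose current content no longer matches the manifest.

    `manifest` maps a file path to the hex digest recorded when the file
    was backed up. Files that cannot be read at all are reported as well.
    """
    damaged = []
    for path, expected in manifest.items():
        try:
            actual = sha256_of_file(path)
        except OSError:
            damaged.append(path)
            continue
        if actual != expected:
            damaged.append(path)
    return damaged
```

Because every byte has to be read to compute the digest, a check of this kind also forces the storage device to read every sector the file occupies.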

Coverage of Re-verification

By re-verifying only a fraction of files per backup cycle, you spread the work over many backup cycles and keep the additional time required to a minimum. This approach assumes that all files are of roughly average size and that the backup task does in fact run periodically. A further assumption is that re-verifying files more often does not help beyond a certain point, but that choice is yours. You can set up tasks to re-verify 100% of all files each time if doing so doesn’t prolong the backup cycle too much. The advantage of 100% re-verification is that you are notified immediately if the quality of your backup media is affected.

If you choose to re-verify, say 5% of files, it will take on average about 20 backup cycles to cover all files once. If the backup task is scheduled to run daily, that would be a 20 day cycle where each and every backup file has been checked again, on average. Whether that’s feasible depends on many factors, such as overall backup time available, the total number of files and their size, and access and I/O speeds involved.
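The 20-cycle figure follows from dividing 100% by the per-cycle percentage. Here is a minimal Python sketch of that arithmetic, assuming files are visited in a fixed rotation. How BackupChain actually picks files in each cycle is not described here, and a purely random pick per cycle would need more cycles before every file has been reached. Both function names are hypothetical.

```python
import math


def cycles_for_full_coverage(percent_per_cycle):
    """Number of backup cycles needed to visit every file once in rotation."""
    if not 0 < percent_per_cycle <= 100:
        raise ValueError("percent_per_cycle must be in (0, 100]")
    return math.ceil(100 / percent_per_cycle)


def files_for_cycle(files, percent_per_cycle, cycle_index):
    """Return the slice of `files` to re-verify in the given cycle.

    File number i is handled in cycle i modulo the total cycle count, so
    each file is visited exactly once per full rotation.
    """
    total_cycles = cycles_for_full_coverage(percent_per_cycle)
    position = cycle_index % total_cycles
    return files[position::total_cycles]
```

At 5% per cycle, `cycles_for_full_coverage(5)` gives 20, so a daily task completes one full rotation every 20 days.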

Re-reading files refreshes sectors

A great feature of modern and enterprise-grade hard drives, whether used in a RAID or not, is that reading a sector triggers many internal checks within the drive. In addition to BackupChain checking the consistency of backup files, the hard drive itself re-evaluates the quality of a given sector and may decide to relocate that sector’s data to a spare internal sector if need be.

Benefits of Verification and Validation

Many users intuitively prefer to keep backup time as short as possible, but the underlying notion is often that digital storage is somehow perfect and can’t have defects, not even partial ones. Backup verification unavoidably prolongs the backup process; however, when data has a very high value for an organization, one should weigh that cost against the risks being covered.

Let’s examine a few possibilities. Hard drives can fail completely or just partially. A wide range of data loss can occur between the time a hard drive internally detects hardware issues and the time it actually reports them to the controller, and then to the OS. Losses can occur before the hard drive has a chance to report them.

When files are processed on a server, the data travels through various memory chips. Apart from the main server RAM, some RAM is built into controllers, hard drives, and other equipment. When a file is loaded and saved, it passes through many of these RAM cells and chips. If just one of them has a defect, it will corrupt the chunk of data it carried. RAM damage is not as uncommon as widely believed, partly because RAM damage and other forms of bit rot can go undetected for years unless they are specifically investigated, for example by using RAM checker software. In some “lucky” circumstances, the server might blue screen and spontaneously reboot. Even then, the warning signs of a RAM defect are often overlooked and mistaken for something else, such as a software defect or driver problem. RAM defects are extremely difficult to notice and pinpoint without taking the server offline and specifically testing for them.

ECC RAM offers some protection against single-bit failures, but not against all types of RAM damage. As mentioned above, multiple-bit failures or damage to the RAM chip’s handling of memory addresses (address bus) can cause corruption that is difficult to spot, even for many RAM checker programs. In data files, the effects of this kind of RAM damage may show up as random characters in random areas of the file, or as missing or overwritten characters. Address bus damage in particular can bypass a range of checks because a valid data word is written to RAM, but to the wrong address. We have received numerous reports of such hardware damage from our customers. The common denominator was that many files were corrupted over many months before the issue was noticed at all. A RAM defect affecting a centralized file server is especially dramatic since the server handles all files for a wide range of users on a daily basis. Every time a file is read or saved and travels through defective RAM, it might get corrupted on the way in or out.
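Why an address fault slips past per-word checks can be shown with a toy model in Python. It is a deliberate simplification: a single parity bit per byte stands in for real ECC, a list stands in for RAM, and all names are invented for the illustration. Every stored cell passes its own parity check, yet the content read back differs from what was written, which only a whole-file comparison (here a SHA-256 digest) reveals.

```python
import hashlib


def parity(byte):
    """Even-parity bit for one byte (a simplified stand-in for per-word ECC)."""
    return bin(byte).count("1") % 2


def write_with_address_fault(data, stuck_bit):
    """Simulate writing `data` to RAM whose address line `stuck_bit` is stuck at 0.

    Each cell stores (byte, parity). A write aimed at an address with that
    bit set lands on the address with the bit cleared instead, so the cell
    content is valid but sits in the wrong place.
    """
    cells = [(0, 0)] * len(data)
    for address, byte in enumerate(data):
        actual = address & ~(1 << stuck_bit)
        cells[actual] = (byte, parity(byte))
    return cells


def all_parity_ok(cells):
    """Per-cell check: does every stored byte match its stored parity bit?"""
    return all(parity(byte) == bit for byte, bit in cells)


def read_back(cells):
    """Return the stored bytes in address order."""
    return bytes(byte for byte, _ in cells)


def digest(data):
    """Whole-content checksum, as a backup verifier might compute it."""
    return hashlib.sha256(data).hexdigest()
```

Writing 16 bytes with `stuck_bit=2` leaves `all_parity_ok` returning `True` while `digest(read_back(cells))` no longer matches `digest(data)`.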

Bit rot occurring inside hard drives can also slip through the various checks that are in place in the hardware and software components of a server. But even if a hard drive reports a sector as bad, the user will be unaware of the problem until the file is actually read. That is, a sector that belongs to a file might go bad, but if the file is never read back in full, this will never be noticed. In mirror RAID devices, a corrupted sector will also go unnoticed unless the drive in fact reports the sector as bad to the controller. If bit rot has occurred inside main server RAM, the file will be corrupted on the way to the drive, unnoticed, unless it is read back and verified.

Reverification, hence, alerts you to the possibility of a hardware fault that would otherwise go undetected. By reverifying, BackupChain causes the file to be read back, which gives hard drives the opportunity to reevaluate the health of each sector the file is stored on. In addition, some of the RAM used in the process is indirectly checked as well. Some modern enterprise-grade hard drives are capable of recognizing a frail sector and internally moving its data to one of a set of spare internal sectors. But this process only occurs if the entire drive is scanned specifically for that purpose or if the file is read back in full.

Ransomware is known to also encrypt backup files. If backups are stored on a vulnerable device or have been somehow affected by an infected device, the fact that the backup files are damaged can potentially go unnoticed for quite some time. For more intensive protection against the special case of ransomware, please contact our support team for additional recommendations.
