How to: Speed and Bandwidth Throttling for Backup Tasks

For detailed product information, please visit the BackupChain home page.

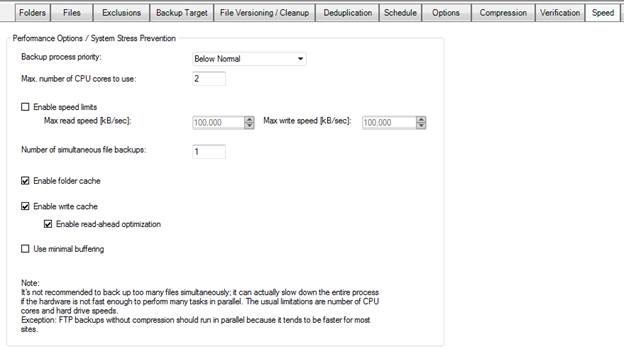

Many of our customers have spent well over $100,000 on server hardware, so it is no surprise that we have received numerous requests for more options to fine-tune backup speed. Most speed-related options are found on the Speed tab.

Often the need is either to limit speed or, conversely, to make full use of all available resources so that the backup cycle is as short as possible.

Specifying Resource Allocation Limits / System Stress Prevention

There are several ways to reduce the usage of system resources, such as RAM and CPU. A lower resource usage usually results in a slower backup process but keeps the system responsive to other services and programs.

The backup process priority controls the priority of the background BackupChain process relative to all other processes on the system, including Windows itself. We recommend a low setting unless BackupChain runs at a time when no other service needs to remain responsive.

“Max number of CPU cores to use” limits BackupChain’s CPU usage and also helps save RAM, since only a certain number of workers are active at a time. Entering the total number of available cores allows full CPU utilization; however, actual utilization also depends on other factors, such as the number of simultaneous file backups and the number of deduplication workers (set in the Deduplication tab). The limit is automatically lifted if you use parallel file backups or more than one deduplication worker.
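BackupChain’s internal scheduler is not documented here, so the following is only a minimal Python sketch of the general idea, assuming a thread pool stands in for BackupChain’s workers: capping the pool size caps both the cores in use and the number of per-file buffers held in memory at once. The names `effective_workers` and `process_files` are hypothetical.

```python
import os
from concurrent.futures import ThreadPoolExecutor

def effective_workers(max_cores_setting: int) -> int:
    """Clamp a user-chosen core limit to the range [1, cores present]."""
    available = os.cpu_count() or 1  # os.cpu_count() may return None
    return max(1, min(max_cores_setting, available))

def process_files(paths, work, max_cores_setting: int):
    """Apply `work` to every path with at most N workers active at once.

    Fewer simultaneous workers also means fewer file buffers in RAM,
    which is why a core limit doubles as a memory limit.
    """
    with ThreadPoolExecutor(max_workers=effective_workers(max_cores_setting)) as pool:
        # pool.map returns results in input order
        return list(pool.map(work, paths))

if __name__ == "__main__":
    # Hypothetical work function: just measure the file name length.
    print(process_files(["a.vhdx", "bb.vhdx"], len, max_cores_setting=2))
```

With `max_cores_setting=2`, at most two files are in flight at any moment, even on a server with many more cores.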

“Enable Speed Limits” activates the read and write I/O speed limits. Use these options to limit transfer speeds to hard drives, FTP servers, or network shares. This is useful to prevent “clogging” of network and Internet lines, and it also helps reduce the stress on your hard drives.

It is in your best interest to avoid straining your system, both to prevent overload and to reduce hard drive stress. To keep your system responsive, we recommend limiting speeds to a percentage of the actual throughput rates. Most of today’s hard drives can sustain read/write speeds of 20 to 50 MB/sec, with much higher burst rates; however, running a hard drive at high rates continuously for a long time raises its temperature and shortens its life expectancy.
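The document does not say how BackupChain enforces its limits internally. The following minimal Python sketch assumes a simple average-rate throttle: after each chunk it compares the bytes copied so far with what the limit allows and sleeps off any surplus. `limit_from_fraction` and `throttled_copy` are hypothetical helpers, and the 40 MB/sec drive throttled to 50% is an illustrative figure.

```python
import io
import time

def limit_from_fraction(measured_mb_per_sec: float, fraction: float) -> float:
    """Convert 'use only X% of measured throughput' into bytes per second.

    1 MB is taken as 1,048,576 bytes here; this is an assumption.
    """
    return measured_mb_per_sec * fraction * 1024 * 1024

def throttled_copy(src, dst, limit_bytes_per_sec: float, chunk_size: int = 64 * 1024) -> int:
    """Copy src to dst while keeping the average rate at or below the limit."""
    start = time.monotonic()
    copied = 0
    while True:
        chunk = src.read(chunk_size)
        if not chunk:
            break
        dst.write(chunk)
        copied += len(chunk)
        # How long the copy should have taken so far at the configured limit
        target_elapsed = copied / limit_bytes_per_sec
        actual_elapsed = time.monotonic() - start
        if target_elapsed > actual_elapsed:
            time.sleep(target_elapsed - actual_elapsed)  # ahead of schedule: wait
    return copied

if __name__ == "__main__":
    # Example: a drive measured at 40 MB/sec, throttled to 50% -> 20 MB/sec
    limit = limit_from_fraction(40, 0.5)
    data = b"\0" * (4 * 1024 * 1024)  # 4 MB test payload held in memory
    src, dst = io.BytesIO(data), io.BytesIO()
    t0 = time.monotonic()
    throttled_copy(src, dst, limit)
    print(f"copied {len(dst.getvalue())} bytes in {time.monotonic() - t0:.2f} s")
```

At 20 MB/sec the 4 MB payload takes roughly 0.2 seconds; averaging over the whole transfer tolerates short bursts while still honoring the limit over time.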

Simultaneous File Backups

“Simultaneous file backups” means that BackupChain can back up several files in parallel within a single task.

Note, however, that we strongly recommend not using this feature unless you know your hardware well and have selected a specific backup set where parallelization makes sense.

Note: If a large number of files is backed up simultaneously and the setup does not suit it, the entire process may actually take longer than with sequential backups. If handling files one by one already uses all resources at their maximum throughput, adding more files in parallel will only slow the process down. Parallel backups make sense, for example, when considerable time is spent compressing each file: a second file can run on a separate CPU core (with the ZIP format, only one core can be used per file). Likewise, a second file can be uploaded while the first is still being prepared. Pushing many files through the same network connection is generally not recommended; the exception is uploading to a remote WAN server, where each connection may be throttled by external networks and multiple upload streams improve overall throughput.
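To illustrate the ZIP case, here is a minimal Python sketch, assuming each file is compressed independently with Deflate (the compression method ZIP archives most commonly use) through Python’s zlib module; it is not BackupChain’s implementation. `compress_one` and `compress_all` are hypothetical names.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

def compress_one(data: bytes) -> bytes:
    """Deflate one file's contents; a single stream runs on a single core."""
    return zlib.compress(data, 9)

def compress_all(payloads, simultaneous: int):
    """Compress each payload independently, `simultaneous` at a time.

    CPython's zlib releases the GIL while compressing, so worker threads
    can occupy several cores. simultaneous=1 is plain sequential processing.
    """
    with ThreadPoolExecutor(max_workers=simultaneous) as pool:
        return list(pool.map(compress_one, payloads))

if __name__ == "__main__":
    files = [bytes(range(256)) * 4000 for _ in range(4)]  # four ~1 MB test "files"
    compressed = compress_all(files, simultaneous=2)
    print([len(c) for c in compressed])
```

Raising `simultaneous` helps only while CPU cores sit idle; if the drive or network is already saturated, extra workers just compete for it.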

Background info on hard drives

Mechanical hard drives are built from rotating platters and heads that move back and forth to read and write data. A modern mechanical hard drive is optimized for good average throughput when streaming data, and for good burst speeds when small files are read or written.

Every head movement costs time; this delay is called ‘seek time’. If the heads need to move a lot, performance degrades enormously, because each movement is wasteful and takes a relatively long time of several milliseconds. Solid state disks have no moving parts and hence do not have this disadvantage.

If you back up a lot of files from the same hard drive, chances are the heads will need to move back and forth. If the CPU in the system is the bottleneck and you are using ZIP compression, or if you use a slow FTP upload link, it may actually make sense to multitask and back up several files at a time. But if the backup target is fast and CPU speed is sufficient as well, the backup will run slower when more than one file is backed up at a time.
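A rough back-of-the-envelope model shows why interleaving hurts on a mechanical drive. The Python sketch below assumes an 8 ms average seek (an illustrative figure within the "several milliseconds" range) and one head movement per chunk switch; `interleave_seek_overhead` is a hypothetical helper.

```python
def interleave_seek_overhead(total_bytes: int, chunk_bytes: int, seek_ms: float) -> float:
    """Extra seconds spent moving heads when files are read in alternating chunks.

    Worst case: every chunk comes from a different file than the previous one,
    so each chunk costs one head movement. Drive cache and read-ahead are ignored.
    """
    switches = total_bytes // chunk_bytes
    return switches * seek_ms / 1000.0

if __name__ == "__main__":
    two_files = 2 * 1024**3  # two 1 GB files read side by side
    for chunk in (1024**2, 64 * 1024):
        secs = interleave_seek_overhead(two_files, chunk, seek_ms=8)
        print(f"{chunk // 1024} KB chunks: about {secs:.1f} s of extra seek time")
```

With 1 MB chunks the model predicts about 16 seconds of pure head movement, and with 64 KB chunks about 262 seconds, whereas reading the two files one after the other needs only a handful of seeks.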

All hard drives also have cache memory. If you read and/or write many files in parallel, the cache is shared among them and its usefulness is diminished. The best hard drive speed is achieved when large files are read and written in long streams with almost no head movement; in that case the cache is also used efficiently as a read-ahead cache.

Ethernet Background Info

Ethernet networking is actually one of the most inferior designs in networking, yet it is the most widespread and lowest-cost technology.

The key thing to know about Ethernet is that its performance degrades sharply when more than one node on a shared segment starts transmitting: packets collide, and each additional node that wants to ‘speak into the wire’ adds long delays.
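The delays come from how classic shared, half-duplex Ethernet (IEEE 802.3 CSMA/CD) recovers from collisions: truncated binary exponential backoff, where each repeated collision of the same frame doubles the range of random wait times. The Python sketch below models only that rule, using 10 Mbit/s timing; `backoff_window` and `backoff_delay_us` are hypothetical names. Modern switched, full-duplex links avoid collisions, but backup streams still compete for the same link bandwidth.

```python
import random

SLOT_TIME_US = 51.2   # 512 bit times at 10 Mbit/s
ATTEMPT_LIMIT = 16    # frame is discarded after 16 failed transmission attempts
BACKOFF_LIMIT = 10    # the window stops doubling after the 10th collision

def backoff_window(collisions: int) -> int:
    """Number of slot times to choose from after the nth collision."""
    return 2 ** min(collisions, BACKOFF_LIMIT)

def backoff_delay_us(collisions: int, rng: random.Random) -> float:
    """Random wait before retransmitting after the nth collision of one frame."""
    if collisions >= ATTEMPT_LIMIT:
        raise RuntimeError("excessive collisions: frame dropped")
    k = rng.randrange(backoff_window(collisions))  # 0 .. 2^min(n,10) - 1
    return k * SLOT_TIME_US

if __name__ == "__main__":
    rng = random.Random(1)
    for n in (1, 3, 10, 15):
        print(n, backoff_window(n), f"{backoff_delay_us(n, rng):.1f} us")
```

After one collision a node waits 0 or 1 slot; after ten, anywhere from 0 to 1,023 slots, which is why throughput collapses as more nodes contend.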

If your backups are taken from a network server or sent to a network device, keep in mind that backup traffic will most likely max out all available network bandwidth. For that reason BackupChain offers speed limits for read speed and write speed. You may have to limit backup speeds to a fraction of your network speed to keep the network usable for other computers.
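Network links are rated in bits per second, while backup speed limits are usually thought of in bytes per second, so a small conversion helps when picking the fraction. This minimal Python sketch assumes 8 bits per byte and decimal megabytes; `network_speed_limit_mb` is a hypothetical helper and the link speeds and fractions are example values.

```python
def network_speed_limit_mb(link_mbit_per_sec: float, fraction: float) -> float:
    """Backup speed limit in MB/sec for a given link speed and share of it.

    Link speeds are rated in megabits per second: divide by 8 for megabytes.
    Protocol overhead means real payload throughput is somewhat lower still.
    """
    return link_mbit_per_sec / 8 * fraction

if __name__ == "__main__":
    print(network_speed_limit_mb(1000, 0.5))  # gigabit link, use half of it
    print(network_speed_limit_mb(100, 0.3))   # 100 Mbit link, use 30%
```

A gigabit link at 50% works out to 62.5 MB/sec, and a 100 Mbit link at 30% to about 3.75 MB/sec.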

This can become extremely critical in a Cluster Shared Volumes (CSV) environment or a failover cluster.

In a network setting you probably would not want simultaneous backups at all.

When to use Simultaneous Backups

The short answer is: if the hard drive or network is NOT the bottleneck.

If your CPU is relatively slow but the hard drives are very fast, as is the case with many servers that are optimized for data transfer rather than computation, it makes sense to run more than one backup in parallel.
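An idealized timing model makes this rule concrete. The Python sketch assumes each file has a fixed amount of CPU work (such as compression) and a fixed amount of I/O on one shared drive or network link, and that parallel workers overlap perfectly; all numbers are hypothetical and the helper names are made up.

```python
def sequential_time(files):
    """files: list of (cpu_seconds, io_seconds) per file, processed one by one."""
    return sum(cpu + io for cpu, io in files)

def parallel_time_lower_bound(files, cores: int):
    """Best possible time with perfect overlap of CPU work and I/O.

    The shared drive or network must still move every byte (total_io), CPU
    work can at most be spread across `cores`, and one file's compression
    cannot be split (longest_cpu). Seek penalties are ignored, so real
    parallel runs on mechanical drives will be slower than this bound.
    """
    total_cpu = sum(cpu for cpu, _ in files)
    total_io = sum(io for _, io in files)
    longest_cpu = max(cpu for cpu, _ in files)
    return max(total_io, total_cpu / cores, longest_cpu)

if __name__ == "__main__":
    cpu_bound = [(10, 2)] * 4   # slow compression, fast disks
    io_bound = [(1, 10)] * 4    # fast CPU, saturated disk or network
    for name, files in (("CPU-bound", cpu_bound), ("I/O-bound", io_bound)):
        print(name, sequential_time(files), parallel_time_lower_bound(files, cores=4))
```

In the CPU-bound case, four files drop from 48 seconds sequentially to at best 10 seconds in parallel; in the I/O-bound case, the best parallel time is 40 seconds versus 44, a gain that extra head movement on a mechanical drive can easily erase.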

Note that BackupChain’s deduplication algorithm parallelizes on its own (you can specify more than one deduplication worker in the Deduplication tab). So usually there is no need to run several deduplications simultaneously.

Simultaneous backups obviously make sense when many CPU cores are available and idle. For virtual machine backups, however, chances are you are using deduplication; in that case it is most likely better to run file backups sequentially and increase the number of deduplication workers.

Another typical example is FTP. If your FTP target uses load balancing and severely limits upload bandwidth per link, you could bypass that by uploading several files at a time.
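The FTP case can be sketched with a one-line capacity model. This minimal Python sketch assumes the server caps each connection at a fixed rate and the client’s own line has a fixed capacity; it ignores any limit the server may place on the number of connections per client. The function name and numbers are hypothetical.

```python
def multi_stream_throughput(streams: int, per_stream_cap: float, line_capacity: float) -> float:
    """Aggregate upload rate when the server throttles each connection.

    Additional streams help until the local line itself is saturated; beyond
    that point they only add overhead.
    """
    return min(streams * per_stream_cap, line_capacity)

if __name__ == "__main__":
    # Hypothetical: server allows 2 MB/sec per connection, line carries 10 MB/sec
    for n in (1, 2, 4, 8):
        print(n, multi_stream_throughput(n, per_stream_cap=2, line_capacity=10))
```

Going from one to four streams quadruples throughput from 2 to 8 MB/sec, but eight streams reach only 10 MB/sec because the line, not the server, becomes the bottleneck.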

Another typical parallelization example is ZIP. Compressing a single file into a ZIP archive cannot, by its nature, be parallelized, so if your hard drives are really fast but a single CPU core is relatively slow (as is the case with almost all multi-core server CPUs), it also makes sense to back up several files at a time.

Folder Caches, Read-ahead, and Buffering Options

The Speed tab also offers options to enable the folder cache, write cache, and read-ahead optimizations, as well as an option to keep buffers at a minimal size.

As with all algorithms, there are pros and cons, and by the very nature of an algorithm there are environments in which it does not perform well. A cache works miracles when cache hits are frequent and cache misses are rare, but how often each occurs depends heavily on the data being backed up, the server environment, and the hardware.
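The trade-off can be made concrete with the standard average-access-time formula, where $h$ is the hit rate and $T_{\text{hit}}$ and $T_{\text{miss}}$ are the costs of a hit and a miss:

$$
T_{\text{avg}} = h \, T_{\text{hit}} + (1 - h) \, T_{\text{miss}}
$$

With illustrative figures of $T_{\text{hit}} = 0.1$ ms and $T_{\text{miss}} = 10$ ms, a hit rate of 95% gives $0.95 \cdot 0.1 + 0.05 \cdot 10 = 0.595$ ms per access, while a hit rate of 50% gives $0.5 \cdot 0.1 + 0.5 \cdot 10 = 5.05$ ms. If maintaining the cache adds its own overhead to every miss, a low hit rate can leave you slower than having no cache at all.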

Most users will not need to alter these settings; however, there are certain scenarios where performance can be improved by changing the configuration.

Enable Folder Cache

This option minimizes file lookups by means of internal caches. If you back up to a remote FTP target that is not a BackupChain FTP Server with remote scan capability, you will want to switch this option off.

A BackupChain FTP Server with remote scan capability keeps a database of all server-side files and sends it to the client. This one-time operation eliminates all subsequent file lookups and significantly reduces backup time when large file servers are backed up. Since other FTP server products do not offer such a feature, you will want to turn the folder cache off if you do not use a BackupChain FTP Server.
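The benefit of the remote scan can be illustrated with a simple round-trip count. This Python sketch is a hypothetical model, not BackupChain’s protocol: `RemoteTarget`, `missing_by_lookup`, and `missing_by_manifest` are made-up names, and each call to the stand-in server is assumed to cost one network round trip.

```python
class RemoteTarget:
    """Stand-in for a remote FTP target that counts network round trips."""

    def __init__(self, files):
        self._files = set(files)
        self.round_trips = 0

    def exists(self, path: str) -> bool:
        self.round_trips += 1          # one round trip per individual lookup
        return path in self._files

    def list_all(self) -> set:
        self.round_trips += 1          # one round trip returns the whole listing
        return set(self._files)

def missing_by_lookup(target: RemoteTarget, local_paths):
    """Check each local file against the server individually."""
    return [p for p in local_paths if not target.exists(p)]

def missing_by_manifest(target: RemoteTarget, local_paths):
    """Fetch the server-side listing once, then check locally."""
    manifest = target.list_all()
    return [p for p in local_paths if p not in manifest]

if __name__ == "__main__":
    remote = [f"share/file{i}.dat" for i in range(10_000)]
    local = remote + ["share/new1.dat", "share/new2.dat"]
    a, b = RemoteTarget(remote), RemoteTarget(remote)
    print(missing_by_lookup(a, local) == missing_by_manifest(b, local))
    print(a.round_trips, "round trips vs", b.round_trips)
```

For 10,002 local files, the per-file approach costs 10,002 round trips versus one for the manifest; over a link with tens of milliseconds of latency, that difference alone can amount to minutes.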

Write cache & read-ahead optimization

Microsoft Windows includes some clever algorithms to cache file access and I/O in general, and most of the time these algorithms produce good results. However, some specific use cases result in bad performance and can even make the system unstable, for example when Windows runs out of memory due to a bug in the caching algorithm. To our knowledge, these bugs persisted at least until Windows Server 2016. To work around these rare issues, you can turn off write cache and read-ahead optimization; in general, however, you will want to keep both switched on for better performance.

Minimal buffering

Faster I/O benefits from larger buffers; however, some servers have to run with limited RAM. In those scenarios, enable minimal buffering to reduce RAM consumption and peaks, at the potential cost of some performance.
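The trade-off is between fewer, larger I/O calls and a smaller memory footprint. The minimal Python sketch below assumes a single stream copy; `copy_stream`, `MINIMAL_BUFFER`, and `DEFAULT_BUFFER` are hypothetical, and the sizes are illustrative rather than BackupChain’s actual values.

```python
import io
import shutil

# Hypothetical buffer sizes; BackupChain's actual values are not documented here.
MINIMAL_BUFFER = 64 * 1024
DEFAULT_BUFFER = 16 * 1024 * 1024

def copy_stream(src, dst, minimal_buffering: bool) -> None:
    """Copy src to dst in fixed-size reads.

    Only one read buffer is alive at a time, so the memory this copy needs
    is on the order of the chosen buffer size.
    """
    length = MINIMAL_BUFFER if minimal_buffering else DEFAULT_BUFFER
    shutil.copyfileobj(src, dst, length)

if __name__ == "__main__":
    payload = bytes(range(256)) * 10_000
    out = io.BytesIO()
    copy_stream(io.BytesIO(payload), out, minimal_buffering=True)
    print(out.getvalue() == payload)
```

With a 64 KB buffer, a 16 MB file takes 256 reads instead of one, which is where the potential slowdown comes from.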

More Information

Bandwidth throttling and speed limits can be configured for BackupChain Backup Software backup tasks such as Hyper-V VM backup, VMware backup, VirtualBox backup, disk-to-disk cloning, disk imaging, and Windows Server backup.
